A Comparative Framework

In this article I present a rigorous comparison between two current paradigms in AI-assisted software development: vibe-coding and spec-driven development (SDD) using Spec Kit. I analyse their defining features, advantages and limitations, and use real-life examples to make their practical differences clear. This is especially relevant for research and professional contexts where the interplay of generative AI, software-engineering workflows and organisational governance matters.

Definitions and Core Characteristics

Vibe-Coding

Vibe-coding is a way of building software in which a developer (or non-developer) describes what they want in natural-language prompts to a large language model or coding assistant and relies heavily on the AI to produce working code, often with minimal upfront specification and little manual code-writing.

Key attributes:

- The human primarily describes what they want (“build a photo-sharing app”, “add user login”) and the AI produces code.

- Minimal or informal specification; often emergent structure.

- Rapid prototyping, experimentation, exploration of ideas.

- The user may accept large portions of generated code without deep manual review (in the pure form).

- Lower barrier to entry, which is especially useful for non-programmers or fast early-stage prototypes.

- Correspondingly higher risk of maintainability problems, architectural drift and hidden errors.

Spec-Driven Development via Spec Kit

Spec-Driven Development (SDD) is a workflow in which requirements, architecture and tasks are formalised as executable or version-controlled specifications before, or in parallel with, code generation. GitHub's open-source toolkit Spec Kit provides scaffolding, templates and commands to support this process.

Den Delimarsky of Microsoft has written a detailed article on Spec Kit.

Key attributes:

- Specification files (e.g., spec.md, plan.md, tasks.md) become the source of truth.

- Workflow: define specification → plan architecture/stack → break into tasks → execute with AI + human collaboration.

- AI coding agents are used, but within a structured frame: human intent is captured, reviewed and versioned.

- Emphasis on aligning intent with architecture, on maintainability and clarity, and on avoiding cases where the “AI goes off in weird directions”.
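
To make "specifications as the source of truth" concrete, here is a minimal Python sketch of a traceability check between spec requirements and tasks. It does not reflect Spec Kit's actual file format or tooling: the `Requirement` and `Task` classes, the `R1`-style requirement IDs and the `covers` field are hypothetical, and in practice the data would be parsed from the spec and task files.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str  # hypothetical ID scheme, e.g. "R1"
    text: str


@dataclass
class Task:
    task_id: str  # e.g. "T001", mirroring the task IDs used in this article
    title: str
    covers: list[str] = field(default_factory=list)  # IDs of requirements this task implements


def uncovered_requirements(reqs: list[Requirement], tasks: list[Task]) -> list[str]:
    """Return IDs of requirements that no task claims to implement."""
    covered = {rid for t in tasks for rid in t.covers}
    return [r.req_id for r in reqs if r.req_id not in covered]


def dangling_references(reqs: list[Requirement], tasks: list[Task]) -> list[tuple[str, str]]:
    """Return (task_id, req_id) pairs where a task cites a requirement that does not exist."""
    known = {r.req_id for r in reqs}
    return [(t.task_id, rid) for t in tasks for rid in t.covers if rid not in known]


if __name__ == "__main__":
    reqs = [
        Requirement("R1", "Employees sign in via SSO"),
        Requirement("R2", "Images are stored in encrypted form"),
        Requirement("R3", "Feed supports pagination"),
    ]
    tasks = [
        Task("T001", "User sign-up via SSO", ["R1"]),
        Task("T002", "Upload endpoint", ["R2"]),
        Task("T003", "Image storage with encryption", ["R2", "R9"]),
    ]
    print(uncovered_requirements(reqs, tasks))  # ['R3']
    print(dangling_references(reqs, tasks))     # [('T003', 'R9')]
```

Both directions matter: an uncovered requirement is intent the AI was never asked to implement, and a dangling reference is work with no grounding in the spec.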

Comparative Table

| Dimension | Vibe-Coding | Spec-Driven Development (Spec Kit) |
|---|---|---|
| Entry point | Prompt and generate code directly | Create spec → plan → tasks before heavy code generation |
| Human role | Guide via natural language, iterate code via AI, often minimal manual code | Define intent, architecture, break down tasks; oversee AI-generated code |
| Structure/formality | Low upfront structure; flexible, emergent | High upfront or parallel structure; specification becomes artefact |
| Speed vs precision trade-off | Very fast prototyping; lower guarantee of correctness or long-term maintainability | Slightly slower (due to upfront work) but better guarantee of alignment, maintainability |
| Best suited for | Ideation, prototypes, “throwaway” apps, single-developer experiments | Critical production apps, team-based development, feature roll-outs, long-lived systems |
| Risks | Hidden architectural debt, unclear ownership, security/maintainability issues | Over-engineering, possible rigidity, slower start, overhead of specification artefacts |
| AI role | Often dominant: “let the model write code, I review/execute” | AI is agentic but controlled: “I give a spec; AI executes within defined constraints” |
| Governance/traceability | Weak traceability, code may diverge from intent or be “black-boxed” | Stronger traceability: spec and code aligned, version-controlled, discovered dependencies and decisions captured |
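
The traceability row can be made concrete with a small check. The Python sketch below flags commits whose messages cite no known task ID. The `T001`-style IDs mirror the task IDs used in the examples in this article, but the rule that every commit must cite a task, the function name `untraced_commits` and the sample SHAs are hypothetical assumptions, not a Spec Kit feature.

```python
import re

# Hypothetical convention: every commit message cites at least one task ID such as T001 or T104.
TASK_ID = re.compile(r"\bT\d{3}\b")


def untraced_commits(messages: dict[str, str], known_tasks: set[str]) -> list[str]:
    """Return the SHAs of commits whose message cites no known task ID."""
    untraced = []
    for sha, message in messages.items():
        cited = set(TASK_ID.findall(message))
        if not cited & known_tasks:  # no cited ID matches a task in the task list
            untraced.append(sha)
    return untraced


if __name__ == "__main__":
    known = {"T001", "T002", "T003"}
    log = {
        "a1b2c3": "T003: encrypt images at rest",
        "d4e5f6": "quick fix for feed",
        "0789ab": "T999: unrelated refactor",
    }
    print(untraced_commits(log, known))  # ['d4e5f6', '0789ab']
```

A check like this could run in CI, but it only proves that a link exists, not that the linked code actually satisfies the task.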

Real-Life Examples Illustrating Each Approach

Example 1: A weekend side-project – “Photo-sharing web-app”

- Vibe-coding scenario: A designer wants to build a simple web app that lets friends upload photos and comment on them. They open an AI coding assistant and type: “Build me a photo-sharing app with sign-up, upload, comment, show feed.” The AI scaffolds the app (frontend + backend) immediately. The designer iterates with requests such as “make the feed grid three columns”, “allow likes” and “use Material UI style”, and the app is soon running in a sandbox. Because speed is the priority, architecture, test coverage and maintainability are de-emphasised.

- Spec-Driven scenario: A startup wants the same photo-sharing app for internal use by its employees. They first create spec.md describing the goals: “Employees upload photos; images must be stored in encrypted form; only employees with active directory status may comment; must integrate with SSO; feed must support pagination and 50k users.” Then plan.md defines the stack: React 18 + Node.js + PostgreSQL + AWS S3 + Cognito. The task list breaks the work into user stories: T001: user sign-up via SSO; T002: upload endpoint; T003: image storage with encryption; T004: feed with pagination; T005: test coverage; T006: security audit. AI agents are then used to generate boilerplate, code stubs and tests, but always within the defined tasks. The result takes longer to get working but is aligned with governance, security and maintenance requirements.
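
One way to keep AI-generated code tied to spec.md is to restate individual requirements as executable acceptance checks. The Python sketch below does this for the commenting rule in the example. The `User` fields, the `can_comment` stand-in implementation and the check names are hypothetical, and a real project would more likely express these checks in its existing test framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class User:
    email: str
    is_employee: bool
    directory_active: bool  # hypothetical flag for "active directory status"


def can_comment(user: User) -> bool:
    """Stand-in for the code an AI agent might generate for this task (hypothetical)."""
    return user.is_employee and user.directory_active


# Each check restates the spec rule "only employees with active directory status
# may comment" as an executable predicate that any implementation must satisfy.
ACCEPTANCE_CHECKS: dict[str, Callable[[], bool]] = {
    "active employee may comment": lambda: can_comment(User("a@corp.example", True, True)),
    "inactive employee may not comment": lambda: not can_comment(User("b@corp.example", True, False)),
    "non-employee may not comment": lambda: not can_comment(User("c@example.com", False, False)),
}


def run_checks(checks: dict[str, Callable[[], bool]]) -> dict[str, bool]:
    """Evaluate every check and map its name to pass (True) or fail (False)."""
    return {name: check() for name, check in checks.items()}


if __name__ == "__main__":
    for name, passed in run_checks(ACCEPTANCE_CHECKS).items():
        print(f"{'PASS' if passed else 'FAIL'}: {name}")
```

The design point is that the checks come from the spec, not from the generated code, so regenerating `can_comment` does not silently change what "correct" means.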

Example 2: Feature addition in existing codebase

- Vibe-coding scenario: A product manager asks the AI: “Add dark-mode toggle to our React app.” The AI generates the code changes, modifies the CSS, uses React's Context API and pushes a commit. The developer tests it lightly and merges it. Because the change is small and straightforward, vibe-coding works fine.

- Spec-Driven scenario: The engineering team uses Spec Kit for their codebase. To add a dark-mode toggle, they create a spec: “Feature: dark-mode toggle. Must persist user setting, integrate with theme provider, support default system preference, must not break accessibility contrast (WCAG AA)”. The plan then reads: “Use MUI ThemeProvider; store preference in localStorage and sync with server; manage CSS variables for colors; add unit & e2e tests.” The generated tasks are T101: theme-context implementation; T102: localStorage persistence & API; T103: UI toggle component; T104: tests for contrast; T105: documentation update. The AI assists with code generation, but a human reviews every generated task and ensures it stays aligned with the spec. This approach handles complexity, maintainability and collaboration better.
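
Task T104 (tests for contrast) is the most mechanical part of this spec, so it can be sketched directly. The Python below implements the WCAG 2.x contrast-ratio formula (relative luminance of linearised sRGB channels, then (L1 + 0.05) / (L2 + 0.05) with L1 the lighter colour) and the AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names and the `#rrggbb` input format are assumptions for illustration; a React codebase would more likely run an equivalent check in its own test tooling.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, as in the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a colour given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the luminance of the lighter colour."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    # Candidate dark-theme pair: light grey text on a near-black background.
    print(round(contrast_ratio("#e0e0e0", "#121212"), 2), passes_aa("#e0e0e0", "#121212"))
    # #777777 on white sits just below 4.5:1, so it fails AA for normal text
    # but passes the 3:1 threshold for large text.
    print(passes_aa("#777777", "#ffffff"), passes_aa("#777777", "#ffffff", large_text=True))
```

In a spec-driven flow, a test like this would iterate over every foreground/background pair in both the light and dark themes, so a generated palette change that breaks contrast fails the build.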

Example 3: Rapid prototyping vs production rollout

- Vibe-coding: A researcher builds a data-visualisation dashboard from an LLM prompt: “give me a Streamlit app reading a CSV, produce interactive charts, add filters for year and category.” The researcher iterates until the visualisations look right. Because the tool is for internal use only and speed matters, vibe-coding is acceptable.

- Spec-Driven: A fintech company builds a customer-facing dashboard that must comply with PCI DSS and GDPR. It adopts Spec Kit so that specs capture compliance requirements and architecture decisions, and tasks cover logging, encryption and audit trails. The AI generates code only after tasks have been formalised and reviewed. This reduces risk and supports regulatory compliance.
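
Audit trails are one of the tasks such a spec would pin down. A common technique for making a log tamper-evident is hash chaining, in which each entry's hash also covers the previous entry's hash. The Python sketch below shows the idea using only the standard library. The `AuditLog` class and the event fields are hypothetical, and hash chaining alone does not make a system PCI DSS or GDPR compliant: for instance, the latest hash must itself be stored somewhere an attacker cannot rewrite, otherwise truncating the end of the log goes unnoticed.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the event."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which every entry commits to the hash of its predecessor."""

    def __init__(self) -> None:
        self.entries: list[tuple[dict, str]] = []  # (event, hash) pairs

    def append(self, event: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        digest = _entry_hash(prev, event)
        self.entries.append((dict(event), digest))  # store a copy of the event
        return digest

    def verify(self) -> bool:
        """Recompute the chain. Editing or reordering entries, or deleting any
        entry except the last, breaks it; truncation needs the latest hash kept elsewhere."""
        prev = GENESIS
        for event, stored in self.entries:
            if _entry_hash(prev, event) != stored:
                return False
            prev = stored
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append({"actor": "alice", "action": "view_statement", "account": "acct-001"})
    log.append({"actor": "bob", "action": "export_report", "account": "acct-002"})
    print(log.verify())  # True
    log.entries[0][0]["action"] = "nothing_to_see"  # simulate tampering with a stored event
    print(log.verify())  # False
```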

Implications for Business, Teams and Academics

For businesses and engineering teams

- Vibe-coding offers a compelling route to speed and innovation: it enables rapid prototyping, experimentation and MVPs, and lets non-engineering teams (e.g., product, marketing) iterate quickly.

- But for mission-critical systems, regulated industries, teams with long-term maintenance expectations, or multi-developer collaboration, the spec-driven approach provides stronger guarantees around alignment, traceability, code quality, and governance.

- Organisations should consider hybrid strategies: use vibe-coding for early prototyping or low-risk features, and spec-driven workflows for production modules.

- Governance and risk-management: Researchers report significant security risks with vibe-coding—e.g., AI-generated code with vulnerabilities, hidden dependencies, poorly understood logic. Spec-driven development helps mitigate these risks by re-introducing specification, review, version control, and human-in-the-loop validation.

- Team roles and skills: Vibe-coding shifts the human role more toward “prompting, reviewing, iterating”, whereas spec-driven emphasises “designing intent, architecture, structuring tasks, reviewing AI output”. Thus the required competencies differ.
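
To illustrate the kind of flaw that review has to catch in either workflow, here is a classic SQL-injection bug next to its parameterised fix, using Python's built-in `sqlite3` module. The table and function names are hypothetical, and the example is not drawn from the studies mentioned; it simply shows why plausible-looking generated code still needs human verification.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "viewer")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Vulnerable: the input is pasted into the SQL text, so quotes in it change the query.
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterised: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # returns every row
    print(find_user_safe(conn, payload))    # []
```

Both functions behave identically for ordinary names, which is exactly why a quick "it works" check during vibe-coding will not surface the problem.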

For academic research and IS/SE discourse

- Vibe-coding represents a novel paradigm of intent mediation, where humans translate goals into prompts and AI translates prompts into code—essentially a shift in who writes code and how the process is mediated.

- Clarifying the notion: while hype often frames vibe-coding as “just talk and AI codes”, research underscores that expertise is still required (for review, debugging and architecture) and that the trade-offs (speed vs maintainability) must be studied empirically.

- Spec-driven development with toolkits like Spec Kit invites research into how specification artefacts (spec.md, plan.md) evolve, how AI agents interpret them, how version control and human-AI hand-off work in practice.

- Psychology/UX research: vibe-coding can produce co-creative “flow” states in which humans and AI collaborate seamlessly, whereas spec-driven contexts rely on structured workflows. This contrast raises questions about cognitive load, trust in AI, error detection and team dynamics.

When to Use Which Approach? (A Decision Guide)

Here is a heuristic decision guide:

- Use Vibe-Coding when:

  - The project is a quick prototype or proof of concept.

  - The cost of failure is low (e.g., internal tool, non-critical feature).

  - Speed and iteration matter more than long-term maintenance.

  - The team is small (possibly one person) and architectural risk is low.

- Use Spec-Driven Development when:

  - You are building production-grade systems with long-term support.

  - Multiple developers and stakeholders are involved (team/organisation).

  - You must meet regulatory, security, architectural or maintainability requirements.

  - You want traceability of decisions, version-controlled specifications, clear tasks and code-review workflows.

- Hybrid Approach:

  - Use vibe-coding for early explorations, then migrate to spec-driven development when the idea proves promising and you scale it.

  - Use the spec-driven approach for the core architecture; allow vibe-coding for peripheral or non-critical modules.

  - Manage the boundary: clearly define which parts are prototyped with vibe-coding and which go spec-driven, to avoid technical debt.
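
The heuristic guide above can be encoded as a small function, which makes its assumptions explicit and easy to debate. The Python sketch below is one possible reading of the guide, not a validated decision model: the `ProjectProfile` fields, the threshold of more than two people for a “team” and the rule that a prototype intended for long-term use gets the hybrid route are hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    prototype: bool            # quick prototype or proof of concept?
    low_cost_of_failure: bool  # e.g. internal tool or non-critical feature
    long_term_support: bool    # production-grade system expected to live long
    regulated: bool            # regulatory, security or architectural requirements
    team_size: int             # people (developers and stakeholders) involved


TEAM_THRESHOLD = 2  # hypothetical: more than two people counts as a "team"


def recommend(p: ProjectProfile) -> str:
    """One possible reading of the decision guide; returns a workflow label."""
    multi_party = p.team_size > TEAM_THRESHOLD
    needs_spec = p.long_term_support or p.regulated or multi_party
    fits_vibe = p.prototype and p.low_cost_of_failure and not multi_party
    if needs_spec and fits_vibe:
        return "hybrid"        # explore with vibe-coding, migrate to specs as the idea scales
    if needs_spec:
        return "spec-driven"
    if fits_vibe:
        return "vibe-coding"
    return "hybrid"            # unclear profile: decide module by module


if __name__ == "__main__":
    weekend = ProjectProfile(prototype=True, low_cost_of_failure=True,
                             long_term_support=False, regulated=False, team_size=1)
    fintech = ProjectProfile(prototype=False, low_cost_of_failure=False,
                             long_term_support=True, regulated=True, team_size=8)
    print(recommend(weekend), recommend(fintech))  # vibe-coding spec-driven
```

Writing the guide down this way exposes the judgement calls (where "small team" ends, what counts as low risk) that the prose leaves implicit.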

Empirical Research on Vibe-Coding

Key studies

- Vibe-coding: programming through conversation with LLMs (Sarkar, 2025) presents the first empirical study of vibe-coding: an analysis of 8.5 hours of think-aloud video recordings of developers working with LLMs in a prompt-centric workflow. The authors document how developers form goals, delegate to the model and revise its outputs, and how they handle related activities such as prompt engineering and iterative refinement.

  - One key finding: “vibe-coding represents a meaningful evolution of traditional AI-assisted programming”.

  - The authors emphasise that their sample consisted of expert users; they “have not studied non-experts.”

- Good Vibrations? A Qualitative Study of Co-Creation, Communication, Flow, and Trust in Vibe-Coding (Pimenova et al., 2025) uses a large qualitative data set (more than 190,000 words from interviews, Reddit posts and LinkedIn) to derive a grounded theory of how developers experience vibe-coding: for example, how trust in the AI influences the balance between delegation and collaboration, how flow is sustained, and where breakdowns (such as the debugging burden) occur.

  - They identify recurring pain points in specification, reliability, debugging, latency, review burden and collaboration.

- Vibe-Coding in Practice: Motivations, Challenges, and a Future Outlook (Fawzy, Tahir and Blincoe, 2025) is a systematic grey-literature review of 101 practitioner sources (blogs, posts) containing 518 behavioural accounts of vibe-coding. The authors report a “speed-quality trade-off paradox”: rapid prototyping is achieved, but perceived code quality and QA are often weak.

  - They argue: “vibe-coding lowers barriers and accelerates prototyping, but at the cost of reliability and maintainability.”

- METR's study Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity (METR, 2025) is a randomised controlled trial in which experienced open-source developers working on familiar codebases took 19% longer to complete tasks when allowed to use AI coding tools than when working without them.

  - This challenges the assumption that AI assistance (and, by extension, vibe-coding workflows) automatically increases productivity.

Empirical findings summary

From the studies above we can summarise key findings:

- Vibe-coding can enhance developer experience (flow, creativity, speed) especially in ideation or prototyping phases (Pimenova et al., 2025).

- However, it introduces significant risk factors for code quality, maintainability, review overhead and debugging burden (Fawzy et al., 2025; Sarkar, 2025).

- For experienced developers working in familiar, production-calibre codebases, AI assistance may slow workflows down rather than speed them up (METR, 2025).

- Outcomes depend strongly on developer expertise and on the ability to review and understand AI-generated code (Sarkar, 2025).

- The literature repeatedly emphasises that “the human still needs to be in the loop” (Pimenova et al., 2025; Fawzy et al., 2025) and that code generated by LLMs is not inherently trustworthy or self-validating.

Gaps and limitations

- Few large-scale quantitative studies exist comparing vibe-coding vs traditional/manual workflows in controlled settings, especially across varying levels of developer expertise, codebase familiarity, organisational contexts.

- Most studies focus on expert users; very little is known about non-expert users, cross-role adopters (e.g., product managers, designers) or low-risk prototyping contexts.

- Long-term outcomes (maintenance cost, technical debt accumulation, team collaboration over time) are under-explored.

- Metrics for “code quality” or “maintainability” in AI-generated code remain under-defined and empirically under-tested.

- Comparative empirical work on spec-driven workflows (versus vibe-coding) is minimal.

Empirical / Observational Research on Spec-Driven Development (with AI)

Key sources

- Spec Kit (GitHub's open-source toolkit) is described in a GitHub blog post: “Spec-driven development with AI … an open-source toolkit for structuring specification-first workflows”.

- The article titled GitHub Open Sources Kit for Spec-Driven AI Development (Ramel, 2025) describes the launch of Spec Kit by GitHub and outlines its design: templates, CLI, prompts, specification-first process.

- Additional observational commentary: the Continue Dev blog post “Maintaining small codebases with spec-driven development” describes a personal experiment in moving from minimal specs to spec-driven, incremental generation.

What can we say about empirical evidence?

- The research literature (peer-reviewed empirical studies) on spec-driven development in AI-coding workflows appears very limited. I was not able to locate a full-scale empirical RCT or large sample study comparing spec-driven vs traditional or vibe-coding in real teams with metrics.

- The GitHub and Continue blog posts are practitioner-oriented rather than academic, offering anecdotal evidence of benefits such as clearer intent, better alignment and version-controlled specs. (The GitHub blog frames this as a shift from “code is the source of truth” to “intent is the source of truth”.)

- The blog post “Maintaining small codebases with spec-driven development” describes how a developer moved from prompt-only to spec-first work and reported improved structure and easier modifications, but this is a single-case narrative.

- One practical advantage, according to GitHub's description: spec-driven workflows help align architecture, improve governance and reduce drift when using AI agents.

Gaps and limitations

- Lack of peer-reviewed quantitative evidence of spec-driven approach outcomes: e.g., impact on defect rate, maintainability metrics, team collaboration, speed.

- Few comparative studies evaluate spec-driven, vibe-coding and traditional coding side by side.

- Limited insights into how teams adopt spec-driven workflows practically: what devs learn, what organisational changes are required, what new skill sets emerge.

- Unknowns around scalability: how the spec-driven process performs in large teams, distributed projects and legacy codebases.

- Also, as with vibe-coding, “code quality” and “team productivity” metrics specific to AI-generated code remain understudied.

Critical Commentary & Research Implications

Theoretical implications

- Vibe-coding suggests a shift in locus of work: from “developer writes code manually” → “developer prompts and reviews AI”. This raises questions about cognitive workload, skills required, trust/verification processes, and human–AI collaboration models. (Pimenova et al., 2025)

- Spec-driven development suggests a further shift: intent/specification becomes the central artefact, with the coding agent executing tasks. Here the challenges are translating human intent into a spec, verifying AI output and maintaining a chain of traceability. (GitHub blog)

- Both paradigms raise governance, auditability and maintainability challenges that the classic software-engineering literature has not yet answered.

Practical implications

- Teams adopting vibe-coding may gain speed in ideation and prototyping, but may incur hidden costs in maintenance, review, debugging and architectural drift, as the grey-literature review by Fawzy et al. (2025) highlights.

- Adopting spec-driven workflows may require investment in tooling and organisational change (defining specs, breaking down tasks, oversight), but may yield better control, alignment and long-term robustness.

- The RCT finding that experienced developers slowed down with AI assistance in familiar contexts (METR, 2025) suggests that context matters: workflow, developer expertise, codebase novelty and tool integration all moderate outcomes.

Research directions

Here are promising future research questions and directions:

- Quantitative longitudinal studies comparing vibe-coding, spec-driven, and traditional workflows on key metrics: productivity (time to feature), defect rate, maintainability (lines of churn, bug backlog), and developer experience (satisfaction, cognitive load).

- Team-level studies: how adoption of AI-coding paradigms affects collaboration, division of labour, code review practices, technical debt accumulation, knowledge transfer.

- Skill-development studies: what skills do developers need in each paradigm (prompt engineering, spec writing, review of AI-generated code), and how these shift entry-level vs senior dev roles.

- Tool/agent infrastructure research: how tooling (LLMs, prompts, spec templates, code-generation pipelines) influences outcomes. Which spec formats work best (Markdown, YAML, a DSL)? How does version control of specs interact with version control of code?

- Ethics, governance, security: systematic empirical evaluation of vulnerabilities, auditability, traceability in AI-generated code in both vibe and spec-driven workflows.

- Context-sensitivity: how workload, domain complexity, codebase familiarity, team size moderate the trade-offs of each paradigm.
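
Churn, one of the maintainability metrics suggested above, can be computed from version-control history. The Python sketch below sums added plus deleted lines per file from the output of `git log --numstat`, which prints one tab-separated `added deleted path` line per changed file and `-` in place of the counts for binary files. The function name and the choice to skip binary files are assumptions, the sample input is a simplified mock of real output, and a real study would also need to handle renames and decide how to attribute churn to AI-generated versus hand-written code.

```python
from collections import Counter


def churn_by_file(numstat_output: str) -> Counter:
    """Sum added + deleted lines per path from `git log --numstat` output."""
    churn: Counter = Counter()
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # commit headers, author/date lines, messages, blank lines
        added, deleted, path = parts
        if not (added.isdigit() and deleted.isdigit()):
            continue  # binary files report '-' instead of line counts
        churn[path] += int(added) + int(deleted)
    return churn


if __name__ == "__main__":
    sample = (
        "commit 1111111\n"
        "3\t1\tsrc/feed.py\n"
        "10\t0\tsrc/upload.py\n"
        "\n"
        "commit 2222222\n"
        "2\t2\tsrc/feed.py\n"
        "-\t-\tassets/logo.png\n"
    )
    print(churn_by_file(sample).most_common())  # [('src/upload.py', 10), ('src/feed.py', 8)]
```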

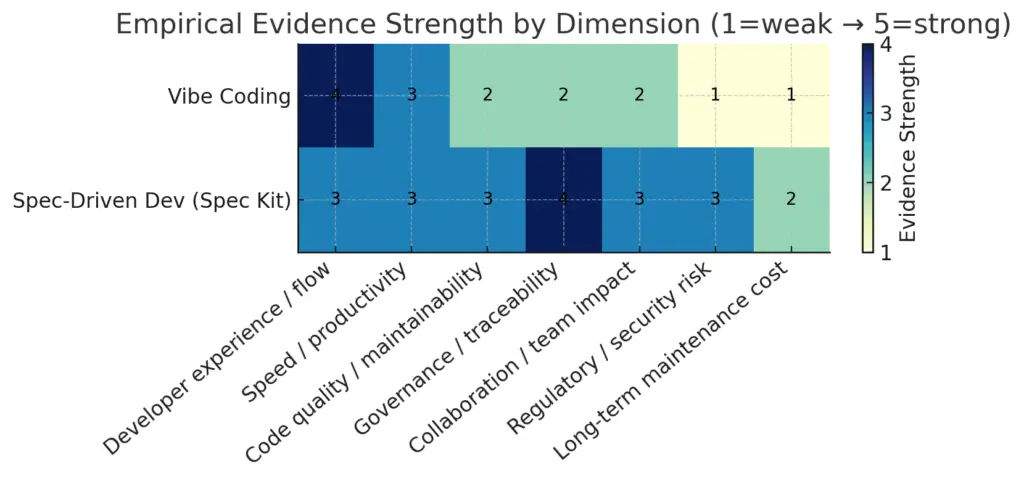

Synthesis of empirical evidence by dimension

| Dimension | Evidence Strength (1 = weak → 5 = strong) | Findings Summary |
|---|---|---|
| Developer experience / flow | 4 | Vibe-coding promotes creativity and flow states (Pimenova et al., 2025); spec-driven data limited. |
| Speed / productivity | 3 | Vibe-coding fast for ideation but not necessarily overall (METR, 2025); spec-driven slower start but more consistent delivery (Continue Dev blog, single case). |
| Code quality / maintainability | 2 | Often degrades under vibe-coding due to lack of specs; spec-driven claims improvement but evidence anecdotal. |
| Governance / traceability | 2 → 3 | Vibe-coding weak; spec-driven strong conceptually but no quantitative validation. |
| Collaboration / team impact | 2 | Mainly qualitative observations; no large team trials yet. |
| Regulatory / security risk | 1 | Discussed theoretically only (itpro.com 2025). |
| Long-term maintenance cost | 1 | No longitudinal studies yet for either paradigm. |

The heatmap shows that evidence is strongest for developer experience (and, to a lesser degree, speed) in vibe-coding. For spec-driven development, the governance and traceability benefits are conceptually strong but not yet quantitatively validated, and both paradigms remain under-researched on long-term maintenance and security.

Summary

In sum: vibe-coding and spec-driven development represent two ends of a spectrum in AI-assisted software development.

- Vibe-coding emphasises speed, experimentation, minimal upfront formalism, and relies on the AI’s generative capacity—but at the cost of structure, traceability, and often maintainability.

- Spec-driven development (exemplified by Spec Kit) emphasises intent, architecture, tasks and versioned specifications, building a more robust workflow around AI-assisted code generation that suits production, long-lived systems.

For practitioners and researchers, the key is not to treat one as universally “better” but to select the appropriate paradigm based on risk profile, team size, longevity of system, regulatory context, and maintainability expectations. Equally important is to recognise the emerging research questions: how human-AI workflows shift developer roles, how specification artefacts evolve in AI-augmented development, and how we measure quality, trust, and governance in these new coding paradigms.