Responsible/Academic Use Series

Introduction: From AI Policing to AI Partnership

Universities and schools are realizing that trying to ban generative AI is like banning calculators in math: short-lived and ultimately unhelpful. Instead, the challenge is to design assessments that remain meaningful—even when students have access to ChatGPT, Claude, or Gemini.

The emerging response: AI-resistant by design, not by surveillance. The focus shifts from “catching misuse” to guiding responsible use through authentic, traceable, and iterative learning processes.

1. Authentic Assessment: Real Tasks, Not Text Outputs

Authentic assessments mirror the complexity and context of professional or research tasks. They make it harder—and less meaningful—for AI to simply produce a one-shot “answer.”

Examples of Authentic AI-Resistant Formats

- Case portfolios: Students build a portfolio around a real-world challenge, integrating field data, reflections, and stakeholder feedback.

- Dataset critiques: Instead of “write a report,” ask students to analyze the bias or limitations of a dataset, document sources, and justify preprocessing choices.

- Simulation briefs: Use workplace or policy scenarios that require judgment under context, such as writing a communication plan for a specific audience or constraint.

These formats emphasize judgment, synthesis, and process, which are exactly the human dimensions of scholarship that AI cannot yet fully reproduce.

2. Iterative Supervision: Process Over Product

AI makes “final products” instantly reproducible. What’s harder to fake is the evolution of understanding over time.

This is where iterative supervision—checkpoints, drafts, supervision logs, or reflection notes—becomes the cornerstone of integrity.

Assignment Patterns That Work

- Process Logs: Students maintain weekly logs showing prompts used, feedback cycles, and decisions taken. Educators review how the student interacted with AI tools, not just the outcome.

- Oral Defenses (“Viva-lite”): After submission, brief 5–10 minute oral reviews pose questions such as “Explain your reasoning behind X” or “What did the model suggest, and why did you reject it?” These moments reveal authentic ownership.

- AI-use Disclosure Sheets: A standardized section at the end of each assignment detailing if, where, and how AI tools were used — aligned with institutional policy.

3. Designing for Transparency, Not Fear

The goal is not to trap students but to normalize transparent, responsible use.

Institutions like ETH Zürich, UCL, and KU Leuven are piloting disclosure templates where students identify:

- which generative tools were used,

- for what task (e.g., brainstorming, proofreading, coding),

- and how they verified factual accuracy.

This shifts the conversation from suspicion to accountability and literacy.
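A disclosure template of this kind can also be captured as a small structured record, which makes incomplete disclosures easy to spot. The sketch below is purely illustrative: the class and field names (`AIUseEntry`, `DisclosureSheet`, `missing_fields`) are assumptions made for this example and are not taken from any institution's actual template.

```python
from dataclasses import dataclass, field


@dataclass
class AIUseEntry:
    tool: str          # which generative tool was used
    task: str          # e.g. "brainstorming", "proofreading", "coding"
    verification: str  # how the student verified factual accuracy


@dataclass
class DisclosureSheet:
    # Hypothetical identifiers; adapt to local policy.
    student_id: str
    assignment: str
    entries: list[AIUseEntry] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Describe every entry that leaves one of the three fields blank."""
        problems = []
        for i, entry in enumerate(self.entries, start=1):
            for name in ("tool", "task", "verification"):
                if not getattr(entry, name).strip():
                    problems.append(f"entry {i}: {name} is empty")
        return problems

    def to_markdown(self) -> str:
        """Render the sheet as a table to append to the assignment."""
        if not self.entries:
            return "No generative AI tools were used."
        rows = ["| Tool | Task | Verification |", "|---|---|---|"]
        rows += [f"| {e.tool} | {e.task} | {e.verification} |" for e in self.entries]
        return "\n".join(rows)
```

A student who lists a tool and a task but no verification step would get back a single message from `missing_fields()`, which a supervisor can use as a prompt for conversation rather than as a penalty.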

4. Rubrics That Recognize Process

Traditional rubrics reward polish. Future-proof rubrics reward traceability, reflection, and originality of judgment.

Rubric Example Snippet

| Criterion | Excellent (A) | Satisfactory (B–C) | Needs Improvement (D–E) |
|---|---|---|---|
| AI Disclosure | Full transparency, detailed process log, clear rationale | Basic mention of tools used | No disclosure or unclear |
| Iteration & Reflection | Evidence of multiple drafts, supervisor feedback integrated | Some iteration shown | Single output, no iteration |
| Critical Evaluation of AI Outputs | Evaluates AI suggestions critically with evidence | Accepts AI outputs with limited questioning | Uncritical acceptance or misuse |
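For courses that record rubric ratings digitally, the three criteria above could be combined into a single process score along the following lines. This is a minimal sketch under stated assumptions: the point values (3, 2, 1), the equal weighting of criteria, and all names (`LEVEL_POINTS`, `CRITERIA`, `score_submission`) are hypothetical choices for illustration, not part of the rubric itself.

```python
# Assumed point values per rubric level; adjust to local grading policy.
LEVEL_POINTS = {"excellent": 3, "satisfactory": 2, "needs_improvement": 1}

# Keys standing in for the three criteria in the rubric snippet.
CRITERIA = ("ai_disclosure", "iteration_reflection", "critical_evaluation")


def score_submission(ratings: dict[str, str]) -> float:
    """Return the share of available rubric points earned, between 0 and 1.

    `ratings` maps each criterion to one of the level names in LEVEL_POINTS.
    Criteria are weighted equally, which is an assumption of this sketch.
    """
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    earned = sum(LEVEL_POINTS[ratings[c]] for c in CRITERIA)
    available = len(CRITERIA) * max(LEVEL_POINTS.values())
    return earned / available
```

Raising an error on a missing criterion, rather than silently scoring it as zero, keeps an unrated dimension from being mistaken for poor performance.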

5. The Shift from Policing to Pedagogy

This transition requires reframing the educator’s role—from AI-detective to AI-mentor.

- Replace “zero-AI” policies with “responsible-AI use” frameworks.

- Train supervisors to ask better questions, not just to spot plagiarism.

- Reward critical engagement with AI, not abstinence.

As one instructor put it:

“We stopped asking ‘Did you use ChatGPT?’ and started asking ‘How did ChatGPT shape your reasoning?’”

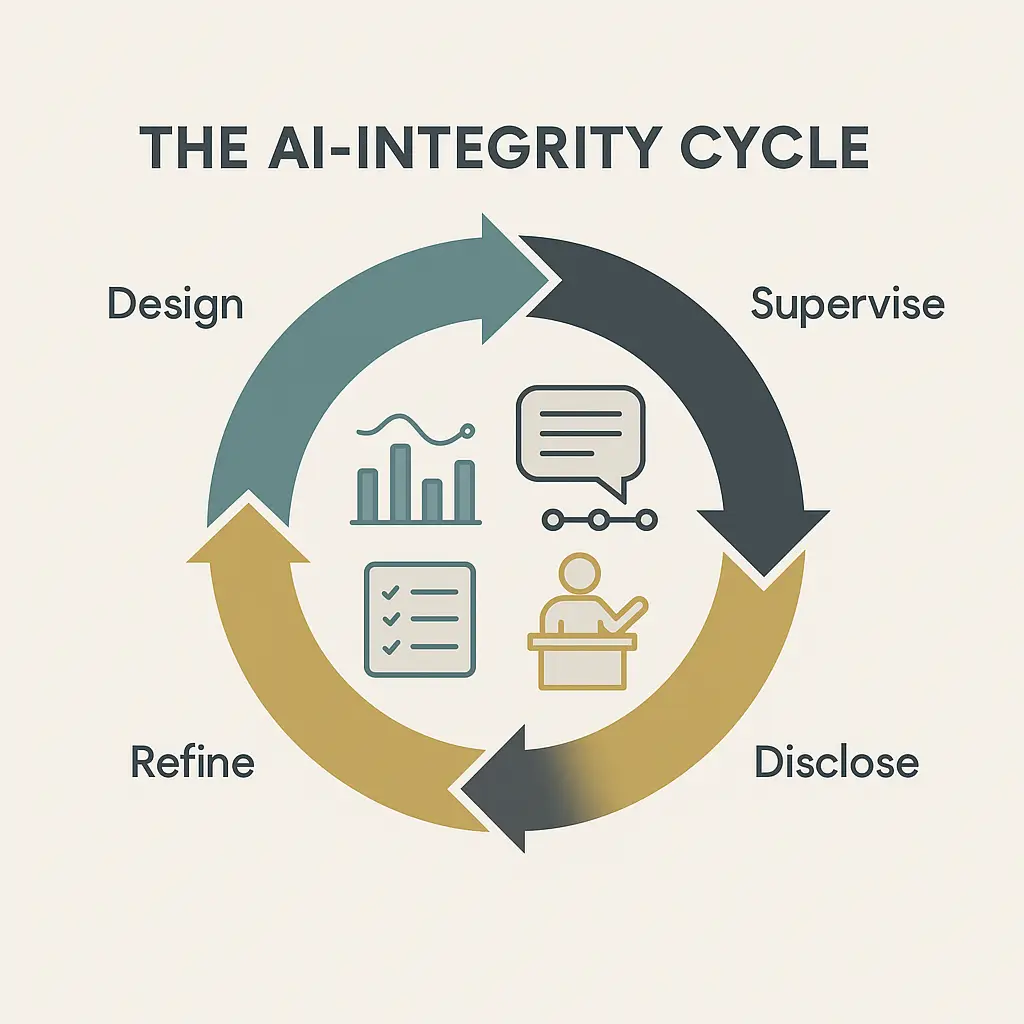

6. Moving Forward: Building the AI-Integrity Loop

An AI-resistant assessment ecosystem is not static—it’s iterative, much like the learning process itself:

1. Design authentic tasks → 2. Supervise process iteratively → 3. Collect transparent disclosures → 4. Refine rubrics & training

This loop aligns with the UNESCO AI Competency Framework (2023) and EU’s “Ethics in Higher Education” guidelines, emphasizing transparency, accountability, and critical digital literacy.

Conclusion: Designing for Integrity, Not Detection

AI-resilient education does not mean blocking AI; it means designing assessments so that human reasoning, reflection, and ownership remain visible.

By embedding authentic tasks, iterative feedback, and transparent AI use, institutions move from control to competence, a far stronger foundation for academic integrity in the GenAI era.